Implementing High Availability: A Load Balancer Architecture with Docker and Nginx

In modern IT infrastructures, a Single Point of Failure (SPOF) is an unacceptable risk. Enterprise system sustainability relies heavily on distributing traffic across multiple servers (Load Balancing) and ensuring the system continues to operate seamlessly even if a server fails (Fault Tolerance).

In this project, I simulated an industry-standard High Availability (HA) architecture using Docker and Nginx. This documentation covers the implementation of the Round-Robin algorithm, container network isolation, and the technical analysis of client-side caching behaviors.

Architecture and Industry Context

In enterprise environments, load balancing is typically handled by solutions like F5, HAProxy, or AWS ELB. At its core, this relies on the Reverse Proxy mechanism. The architecture designed for this project consists of:

- Backend Nodes (3x): Web servers responsible for handling client requests.

- Load Balancer (Nginx): The central gateway managing and distributing traffic.

The primary advantage of this architecture is Horizontal Scaling. As traffic increases, the system can handle the load by simply spinning up additional container nodes without altering the core infrastructure.

Technical Infrastructure and Configuration

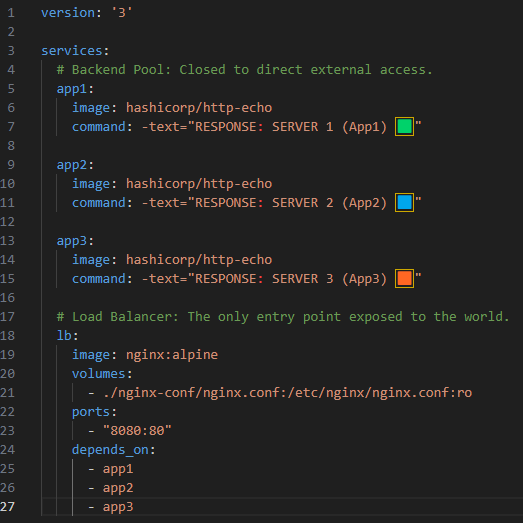

docker-compose was selected for orchestration, allowing for the management of network isolation and service dependencies within a single configuration file.

1. Network Isolation and Security (Docker Compose)

A critical security practice was applied in the infrastructure definition: The ports of the backend servers are not exposed to the host machine (public network). Access is strictly permitted only through the Load Balancer. This adheres to the “Defense in Depth” principle by minimizing the attack surface.

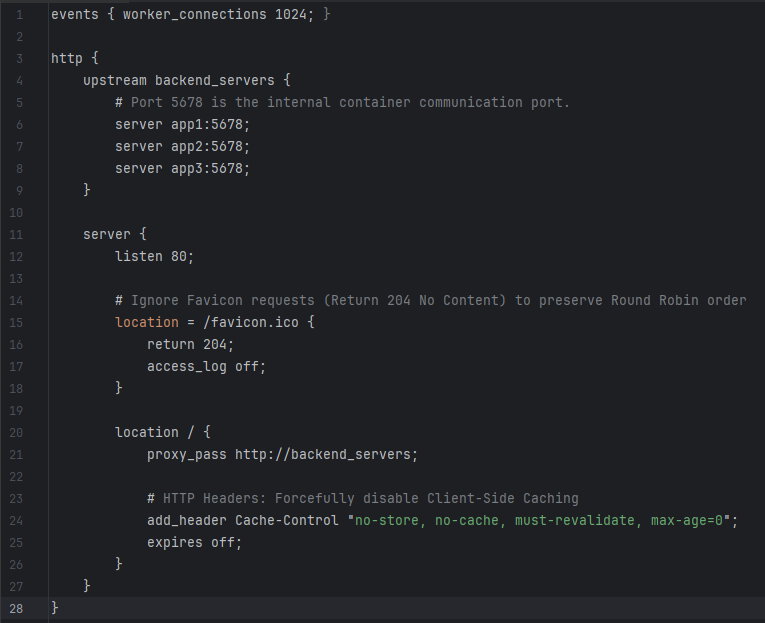
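To make the isolation idea concrete, here is a minimal Compose sketch. It is not the project's actual file: the `app1` to `app3` service names follow the commands used later in this post, while the images, the `loadbalancer` service name, host port 8080, and the `nginx.conf` mount path are assumptions for illustration.

```yaml
# Sketch only: images, the "loadbalancer" name, and host port 8080 are assumptions.
services:
  app1:
    image: nginx:alpine          # assumed backend image
    expose:
      - "80"                     # reachable on the Compose network only
  app2:
    image: nginx:alpine
    expose:
      - "80"
  app3:
    image: nginx:alpine
    expose:
      - "80"
  loadbalancer:
    image: nginx:alpine
    ports:
      - "8080:80"                # the only port published to the host
    volumes:
      - ./nginx.conf:/etc/nginx/nginx.conf:ro
    depends_on:
      - app1
      - app2
      - app3
```

The backends use `expose` rather than `ports`, so nothing on the host can reach them directly. In a real setup each backend would also serve its own identifying page so the responses can be told apart.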

2. Traffic Management and “Round Robin” (Nginx)

Nginx utilizes the Round Robin algorithm by default. However, during the testing phase, two critical client-side technical issues were identified, requiring specific configuration overrides:

- Caching Issues: Modern browsers aggressively cache static responses, preventing the verification of proper load distribution.

- Favicon Requests: Browsers automatically request `/favicon.ico` on every refresh, which shifts the Round Robin sequence and distorts test results.

The following configuration enforces “pure” traffic management by mitigating these client behaviors:
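As a rough illustration of such overrides, a minimal `nginx.conf` sketch might look like the one below. The upstream name `backend`, port 80, the exact header value, and the choice to answer favicon requests with `204` are all assumptions, not necessarily what the project used.

```nginx
# Sketch only: names, ports, and header values are assumptions.
events {}

http {
    upstream backend {
        # No balancing directive is set, so Nginx falls back to round robin.
        server app1:80;
        server app2:80;
        server app3:80;
    }

    server {
        listen 80;

        # Answer favicon requests at the proxy so they never reach the
        # upstream and never advance the round-robin sequence.
        location = /favicon.ico {
            access_log off;
            return 204;
        }

        location / {
            proxy_pass http://backend;
            # Ask the browser not to cache, so every refresh is a real request.
            add_header Cache-Control "no-store, no-cache, must-revalidate" always;
        }
    }
}
```

Handling the favicon in its own `location` keeps each page refresh to exactly one proxied request, which is what makes the rotation observable in a browser.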

Implementation and Verification Tests

The deployment and stability tests were conducted using the following methodology.

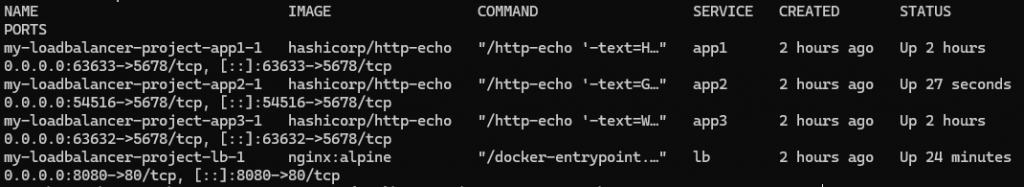

1. Deployment:

docker-compose up -d

Post-execution verification confirmed that all services reached the “Up” state.

2. Load Distribution Test: Sequential requests made via the browser confirmed that Nginx correctly distributed traffic in the order of App1 -> App2 -> App3. The injection of HTTP Headers successfully prevented browser caching, ensuring real-time response data.
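The same check can be done from the command line, which sidesteps browser caching and favicon requests entirely because `curl` does neither. A small sketch, assuming the load balancer is published on `http://localhost:8080` and that each backend returns a short body identifying itself (both are assumptions; `probe` is a hypothetical helper name):

```shell
# Sketch: send N sequential requests and print each response body.
# LB_URL is an assumed address; adjust it to the published load balancer port.
LB_URL="${LB_URL:-http://localhost:8080}"

probe() {
  count="$1"
  i=1
  while [ "$i" -le "$count" ]; do
    # -s hides curl's progress output so only the response body is printed
    printf '%s: %s\n' "$i" "$(curl -s "$LB_URL/")"
    i=$((i + 1))
  done
}
```

Calling `probe 6` should then show the responses cycling through the three backends twice if round robin is working as expected.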

3. Failover (Fault Tolerance) Simulation: To test the resilience of the system, the App2 service was manually terminated to simulate a server crash.

docker-compose stop app2

Result: With App2 offline, Nginx's passive health checks detected the failure from the failed connection attempts and took the node out of rotation. Traffic was rerouted to the remaining healthy nodes (App1 and App3), and the client experienced no HTTP 500 errors or service interruptions.
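For reference, the passive health-check behavior is governed by a few directives that can be written out explicitly. The fragment below is a sketch meant to sit inside the `http` block; the upstream name `backend`, port 80, and the 2-second connect timeout are assumptions, while `max_fails=1` and `fail_timeout=10s` simply spell out Nginx's defaults.

```nginx
# Sketch only: "backend", port 80, and the connect timeout are assumed values.
upstream backend {
    # max_fails=1 and fail_timeout=10s are Nginx's defaults, written out here:
    # one failed attempt marks the server unavailable for 10 seconds.
    server app1:80 max_fails=1 fail_timeout=10s;
    server app2:80 max_fails=1 fail_timeout=10s;
    server app3:80 max_fails=1 fail_timeout=10s;
}

server {
    listen 80;

    location / {
        proxy_pass http://backend;
        # Default behavior, made explicit: on a connection error or timeout,
        # retry the request on the next server in the group.
        proxy_next_upstream error timeout;
        # Assumed value: give up quickly when a stopped container does not answer.
        proxy_connect_timeout 2s;
    }
}
```

Because the check is passive, a failure is only noticed when a real request hits the dead node; that request is retried on the next server, which is why the client does not see an error.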

Technical Key Takeaways

This project provided practical experience in the fundamental building blocks of system integration beyond simple web server configuration:

- Log Analysis: The initial `502 Bad Gateway` error encountered during development was diagnosed via log analysis, revealing a missing port definition in the upstream block.

- Client Behaviors: Gained insight into how Server-side configurations (Headers) must be utilized to manipulate Client-side behaviors (Caching, Favicon) for accurate testing.

- Service Continuity: Demonstrated the critical role of container architecture in maintaining system integrity during hardware or software failures.